To be held during the 20th Int. Symposium on Methodologies of Intelligent Systems, ISMIS 2012, 4-7 December 2012 Macao (http://www.fst.umac.mo/wic2012/ISMIS/)

Official CFP

Workshop Scope

Over the past 3 years, the Semantic Web activity has gained momentum with the widespread publishing of structured data as RDF. The Linked Data paradigm has therefore evolved from a practical research idea into a very promising candidate for addressing one of the biggest challenges in the area of intelligent information management: the exploitation of the Web as a platform for data and information integration in addition to document search. Although numerous workshops have already emerged at the intersection of Data Mining and Linked Data (e.g. Know@LOD at ESWC) and even Challenges have been organized (USEWODs at WWW, http://data.semanticweb.org/usewod/2012/challenge.html), the particular setting chosen here (with a highly topical Government-related dataset) will make it possible to explore new methods of exploiting Linked Data with state-of-the-art mining tools.

Workshop Topic

The workshop consists of an Open Track and a Challenge Track.

The Open Track invites regular research papers describing novel approaches to applying Data Mining techniques to Linked Data sources.

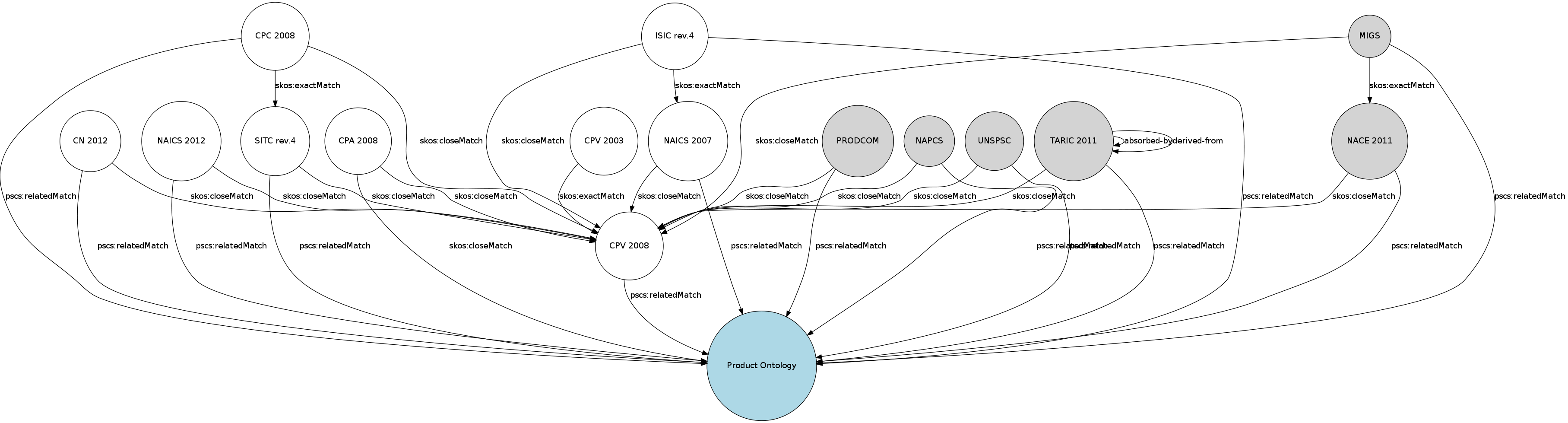

Participation in the Challenge Track requires participants to download a real-world RDF dataset from the domain of Public Contract Procurement and to accomplish at least one of the four pre-defined tasks on it, using their own or a publicly available data mining tool. To get access to the data, participants have to register for the Challenge Track at http://keg.vse.cz/ismis2012. A partial mapping to external datasets will also be available, which will allow further potential features to be extracted from the Linked Open Data cloud.

- Task 1: unrestricted discovery of interesting nuggets in the (augmented) dataset.

- Task 2: similar to Task 1, but the category of interesting hypotheses will be partially specified.

- Task 3: prediction of one of the features natively present in the training data (but only added to the evaluation dataset after the result submission).

- Task 4: prediction of a feature manually added to a sample of the data by a team of domain experts.
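As a rough illustration of how features might be extracted from RDF data and from linked external resources before mining, the following minimal Python sketch pivots subject-predicate-object triples into one feature record per resource and then copies the properties of a linked resource into the referring record. All identifiers, prefixes, property names, and values in it are hypothetical and are not taken from the Challenge dataset; a real pipeline would more likely read the data with an RDF library or a SPARQL endpoint.

```python
from collections import defaultdict

# Hypothetical triples for illustration only; the "ex:" names and values
# are assumptions and do not come from the Challenge dataset.
TRIPLES = [
    ("ex:contract1", "ex:procedureType", "open"),
    ("ex:contract1", "ex:estimatedPrice", "120000"),
    ("ex:contract1", "ex:contractingAuthority", "ex:authorityA"),
    ("ex:contract2", "ex:procedureType", "restricted"),
    ("ex:contract2", "ex:estimatedPrice", "45000"),
    ("ex:authorityA", "ex:region", "ex:regionX"),
]


def triples_to_rows(triples):
    """Group (subject, predicate, object) triples into one dict per subject.

    Simplification: if a predicate repeats for a subject, the last value wins.
    """
    rows = defaultdict(dict)
    for subject, predicate, obj in triples:
        rows[subject][predicate] = obj
    return dict(rows)


def join_linked_features(rows, link_predicate):
    """Copy the features of a linked resource into each row that points to it."""
    enriched = {}
    for subject, features in rows.items():
        merged = dict(features)
        target = features.get(link_predicate)
        if target in rows:
            for predicate, value in rows[target].items():
                # Prefix the copied feature with the link it was reached through.
                merged[f"{link_predicate}/{predicate}"] = value
        enriched[subject] = merged
    return enriched


rows = triples_to_rows(TRIPLES)
table = join_linked_features(rows, "ex:contractingAuthority")

for subject in ("ex:contract1", "ex:contract2"):
    print(subject, table[subject])
```

The resulting per-contract dictionaries can be passed to any tabular data mining tool; the one-hop join shows how a mapping to an external dataset may contribute additional columns.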

Participants will submit textual reports (Challenge Track papers) and, for Tasks 3 and 4, also the classification results.

Submissions

Both the research papers (submitted to the Open Track) and the Challenge Track papers should follow the Springer formatting style. The templates for Word and LaTeX are available on the workshop website http://keg.vse.cz/ismis2012 and can also be found at http://www.springer.com/authors/book+authors?SGWID=0-154102-12-417900-0. Submissions should not exceed 10 pages. All papers will be made available on the workshop web pages, and post-conference proceedings will be published for selected workshop papers.

Papers (and results for Tasks 3 and 4) should be submitted via EasyChair at http://www.easychair.org/conferences/?conf=ismis2012dmold.

Important Dates

- Data ready for download: June 20, 2012

- Workshop paper and result data submissions: August 10, 2012

- Notification of Workshop paper acceptance: August 25, 2012

- Workshop: December 4, 2012